Episode #158: AI-Assisted Toy Design: Revolutionizing The Toy And Game Industry

I bet that YOU can generate a high resolution concept image of your toy idea for free, and in seconds. The use of AI art exploded in recent months, with the search term “AI art” spiking in the US this past December. The breakout AI tools have been Midjourney, Lensa, and DALL-E. Since then, there has been considerable and understandable backlash against AI art from real human artists across the globe. In this episode we explore three things: 1) how AI can help the development process of your toy or game, 2) why some artists are frustrated by the growing popularity of AI art, and 3) what legal ramifications using art generated by AI could pose.

As the golden age of AI art seems to be just on the horizon, one of my favorite quotes by Tom Waits seems to be challenged. “Fast, Cheap, and Good… pick two. If it’s fast and cheap, it won’t be good. If it’s cheap and good, it won’t be fast. If it’s fast and good, it won’t be cheap.” It seems that, at least for now, AI art can deliver fast, cheap, and good work. Some might argue the “good” is debatable, but out of that trifecta, I think it’s the affordability of AI art of the future that we really have to worry about. What use is a free AI generated image if you can’t use it because it infringes on the copyright of an existing artist? Find out the answer to that question and more in this podcast episode.

⭐️ Check out ALL of the images I generated through AI and mentioned in this episode by clicking here. ⭐️

Children’s Book Spreads & Toy Designs Generated By The Toy Coach via DALLE-2

Toy Designs Generated By The Toy Coach via MIDJOURNEY

EPISODE CLIFF NOTES

Learn the 3 popular AI tools of today: Midjourney, DALL-E, and Lensa. [3:28]

Find out why developers consider AI to be creating unique images and why artists disagree. [10:42]

Hear how I prompted Midjourney AI to design an actual toy! [17:21]

Curious if AI art can help you develop a children’s book? Maybe not entirely, but find out what it can do for your composition. [30:56]

Why you may not be able to use the images you generate. [39:12]

Find out how independent board game designers are making use of AI art. [44:26]

-

This episode is brought to you by www.thetoycoach.com

Listen to this episode: #63: How To Make A Toy, The 5 Steps of Product Development

Download the Toy Development Checklist by clicking here.

Midjourney AI Art Generator and Read Midjourney Terms of Service

DALL-E AI Art Generator and Read DALL-E/OpenAI Terms

Lensa App on Google Play and Apple Store

Support Hissy Fit Game on Kickstarter. Learn more here.

[00:00:00] Azhelle Wade: You are listening to Making It in The Toy Industry, episode number 158.

[00:00:05] Hey there, toy people, Azhelle Wade here and welcome to a new year. Welcome to 2023, and welcome back to another episode of the Toy Coach Podcast, Making It In The Toy Industry. This is a weekly podcast brought to you by thetoycoach.com.

[00:00:34] We're kicking off the new year with a solo podcast episode, and today's podcast episode has been in the works for months. It was almost ready in December. But with everything going on between toy shows, holidays, and a ton of travel, I was worried that releasing this episode at the tail end of 2022 wouldn't get the visibility that I knew it deserved.

[00:00:55] So here we are kicking off January 2023 with an episode that I'm sure is gonna spark conversation, but one that I'm really excited to share with you. Today we're going to talk about how you can use AI to generate high resolution concept images of your toy idea for free today, and in seconds. For real. There's this quote by Tom Waits that I was reminded of while researching and developing this episode.

[00:01:28] And the quote is this: fast, cheap, and good. Pick two. If it's fast and cheap, it won't be good. If it's cheap and good, it won't be fast. If it's fast and good, it won't be cheap. So fast, cheap, and good. Pick two. Words to live by. But dare I say, with AI art generators, at least right now, it seems like we can have all three, but it's highly likely that it won't stay that way and we won't have this trifecta forever.

[00:01:57] But while we do have access to cheap, good and fast productions of high quality renderings of images that don't yet exist, I think we should make the most of it. By the end of this episode, you will have learned about three of the most popular AI art generators right now. We're gonna get into the benefits and drawbacks of using these AI art tools that seemingly create images out of nothing, but we'll learn about exactly why that's not true.

[00:02:28] You'll learn why AI art has a big chunk of the human art world up in arms and upset, and why another segment of artists and creators are actually enjoying using this new tool. You'll learn the names of the most popular AI art tools on the web, and I'm gonna walk you through several experiments that I performed to generate AI art of toy ideas, and even storybook spreads.

[00:02:56] By the end of this episode, you're gonna know how you could use AI art generation in your toy development process or in your children's book development process, and you may be surprised to know that some people are already doing it. First up, let's talk about the AI tools you need to know. So there are several AI tools right now on the market. There are AI generators that generate AI art, and then there are generators that generate copy.

[00:03:26] I'm gonna cover copy in another episode. Today we're going to talk about AI art. So I've been messing around over the past few months with different AI art generators. So the ones we're gonna dive into today are Midjourney, DALLE 2, and Lensa. In next week's episode, join me to go over AI copywriting tools. Originally, I thought we would do them all in this episode, but as I started writing I thought, whoa, this is a lot of information.

[00:03:54] So for today, let's stick to AI art, and next week we can dive into AI copy. So first up, Midjourney. You may have heard about it over the web, maybe passively in conversations with your friends and maybe you had no idea how to get started, what it was, how it worked. Well, all of that ends today. We're gonna explain everything to you, my friend.

[00:04:16] Midjourney is a beta stage Discord app-based AI image generator that is powered by user prompts. Midjourney can take complex prompts and generate hyper-realistic images in a matter of seconds. Once you sign into Discord and join the Midjourney server, that's it. You are in the app. From there, you need to check out the newbie room channels. There are several newbie room channels, and all you have to do is type in slash imagine, click on the word Imagine, and then enter a prompt for anything. Well, they do have terms and conditions, so almost anything.

[00:04:57] Now, one of the first things that I tried was an idea I had a while back but never developed, and it was Drag Queen Barbie. So what came out of that? A gorgeous RuPaul inspired Barbie doll, I kid you not, wearing a stunning red dress. What's cool with Midjourney that I haven't seen on other AI generators is that after you generate an image, you can hit a button below the image to either request a variation of one of the images generated or a more high resolution rendering of one of the images that it has generated.

[00:05:32] When you choose the option to give a variation of an image that they generated for you, the changes are subtle. So for my drag Queen Barbie when I requested a variation on one of the outputs that Midjourney gave me, all I got were these slightly altered facial features.

[00:05:50] So I noticed eyes were further apart in one image. Then in another image there was a removal of a glove on the arm, and then there was an addition of a glove on the arm and a few other small details. I know in this podcast episode I'm going to be describing a lot, so if you want to see the renderings I'm talking about, head over to thetoycoach.com/158 and that's where you'll be able to find all of the renderings that I mentioned in today's episode.

[00:06:18] So Midjourney, really easy to use. They have a how-to channel on their Discord server that explains the process as well. Pretty much similarly to how I broke it down to you, but make sure you read all the terms and conditions before you get started. Now, the second AI tool that we're gonna talk about today is DALLE 2.

[00:06:38] Before Midjourney's rise to popularity, DALLE 2, formerly known as Craiyon, was the AI art tool. It was the one everyone was talking about, but no one was really afraid of it because at first all of the things that it would output were pretty crude. DALLE 2 is defined as an AI system that can create realistic images and art from a description written in natural language. So to work with DALLE 2, all you have to do is go to the website for DALLE 2. The link will be at thetoycoach.com/158.

[00:07:12] They have a parent company called OpenAI, so it's actually on the OpenAI website. So you'd have to visit labs.openai.com and that will take you to DALLE. Once you sign up, you can start writing prompts in seconds. To get a really good result, like a really high res, well done image, DALLE seems to require a little bit more creativity with the language, and they do encourage you and give you ideas for prompts on the page where you type in your prompts.

[00:07:43] So things like calling out lighting, colors, render styles, and even the vibe you might wanna convey seem almost imperative to generating a truly high quality image using DALLE. So again, with DALLE, I tried Drag Queen Barbie and the renderings were really scary. Less than impressive.

[00:08:02] The doll did have a slightly muscular build, a little extra makeup, but honestly, all in all, it looked like a terrible Photoshop job done by a really young kid. I tried the prompt a few more times just to see if the exact same prompt that worked in Midjourney would work in DALLE, but really it didn't create anything worth looking at. But I will share the image that that prompt got, just so you can see the difference in the two.

[00:08:27] With DALLE 2 you'll see on the main page, when you go to the website, examples of really great renderings that the tool has generated. And if you read the prompts that created those renderings, you'll understand how DALLE requires a bit more information. So one example rendering on their homepage is a little graphic fish. The prompt was 3D render of a cute tropical fish in an aquarium on a dark blue background, digital art. So that's really the detail you need to apply in order to get a high quality rendering, which I didn't do in that very first test.
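To make the structure of that fish prompt easier to reuse, here is a minimal Python sketch that assembles a descriptive prompt from separate parts. The `ImagePrompt` class and its field names are hypothetical, invented only for this illustration; they are not part of DALLE or any OpenAI tool. The result is plain text you would paste into the prompt box yourself.

```python
from dataclasses import dataclass


@dataclass
class ImagePrompt:
    """Hypothetical helper: the parts of a descriptive text-to-image prompt."""
    render_style: str      # e.g. "3D render"
    subject: str           # what the image shows
    setting: str = ""      # where the subject is
    background: str = ""   # background color or scene
    medium: str = ""       # e.g. "digital art"

    def to_text(self) -> str:
        # Start with the render style and subject, then append optional details.
        text = f"{self.render_style} of {self.subject}"
        if self.setting:
            text += f" {self.setting}"
        if self.background:
            text += f" {self.background}"
        if self.medium:
            text += f", {self.medium}"
        return text


# Rebuild the fish prompt quoted above from its parts.
fish_prompt = ImagePrompt(
    render_style="3D render",
    subject="a cute tropical fish",
    setting="in an aquarium",
    background="on a dark blue background",
    medium="digital art",
)
print(fish_prompt.to_text())
```

Swapping out one field at a time (say, a different background or render style) is one way to compare how each detail changes the result, though how much each detail matters is something you would have to test for yourself.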

[00:09:04] So now the third AI tool that I wanna talk about today is Lensa. Likely, you've already heard about the Lensa app because you, like me, have seen it all over social media. When this app got popular, that is when I noticed the big uproar in the art community because Lensa's main focus was to create artistic portrait renderings in a variety of artistic styles.

[00:09:27] The Lensa app would give users like 50 to a hundred artistic renderings of their face done in different angles, from different perspectives and in slightly different styles, for a ridiculously low price. I think the lowest package was something like $1.99. I think the most expensive was $11 at one point.

[00:09:48] And simultaneously, while people are using this app, their AI is getting trained in how to read the artwork that it's pulling this information from, and learning how to create art based on photo input. So you can find Lensa in your app store, and they have it for Android and for iPhone. So once you sign up, you're gonna have to upload 15 photos of yourself, choose a number of portraits of yourself and ask it to generate, you know, however many artistic variations of your face that you want.

[00:10:21] And it's going to use art that the coders, I guess, of this app have fed it to learn artistic styles from. So there are a couple of legal things that we will get into in this conversation, but I do wanna broach them very quickly. So just number one, artists are not happy with the fact that these apps are being trained on artwork that is copyrighted and then said to be creating original art, even when that original art, you know, really mimics their art. And then further, you know, what I found interesting?

[00:10:53] I did see someone had generated a bunch of images of Selena Gomez using Lensa, and I wondered about the legalities of somebody uploading images of Selena Gomez from Google, putting them into this app and then giving this app the right to use her images and generate quote, unquote, unique art based on the other artwork of artists that has been stolen from the web. It's just an interesting and intricate copyright mess that is being caused right now with these AI tools, as far as the photos that you upload into the tool to get information out. And really, depending on who's even uploading these photos, the user can now upload photos to train the AI, aside from the developers.

[00:11:42] So there's just a lot here. So let's dive more into the issues that real human artists have with AI art. And this is a major point of contention that many artists have. The problem is that AI art uses existing art from the web to train its AI. The difference is that instead of an AI being told to execute a drawing in a certain style, the AI is fed thousands of drawings in a specific style, and then prompts can be used to tell the AI to learn from those thousands of images that it's been fed, and learn the rules of the creation of those images, the visual rules of those art pieces and then apply them to create new art.

[00:12:33] So that's the crazy and scary part. So ever since I've been following AI, I've also seen a lot of posts from artists that are just angered by the use of artistic styles to educate these AI programs because they are not being compensated and they don't have a way to opt out because if their artwork is online, even if it is copyrighted, it seems that these AI are learning from art that is available online.

[00:13:01] Now for example, with any of these apps, you can use prompts that specify a specific style. So for example, you could do a prompt that says, portrait of a woman in the style of Mondrian. And Mondrian is a popular artist. Everyone knows the style; when you say that, you know what to think of.

[00:13:21] And the AI has been trained on many different popular artists so that it can actually execute something when you put in a prompt like that. So I did put in that prompt just to see what it would do, and it does look like a Mondrian. It's crazy. So the real human artist's concern is that over time, you know, the AI will be trained to do this from their art. Yes, Mondrian is world famous, world known. But the next big artists of today, like modern, famous artists of today, feel they could be next. It could be their names and their art style that the AI is trained to copy.

[00:14:01] And then people can be generating art that they never would create or be aligned with, and art that they will not get compensated for, although it follows the style of their work. And here is like the huge gray area, because if we think about it, humans often look at artwork, get inspired and create new art and new products based on what exists.

[00:14:28] The only thing AI is doing is doing it a lot faster. Because they're doing it faster, they can do a lot more, and sometimes it can look better, and that is where the anger and tensions are arising. So as these programs continue to grow and learn from artwork available online, some artists are fearing that their work will be stolen and then be used as prompts in the app. Some artists are already feeling that the portrait art produced by Lensa already too closely mimics portrait art that they may do. So there's this graphic artist, his name is Zen Edge, you can find him over on Instagram, I believe.

[00:15:07] And there was this image that he was said to have created floating around on Facebook. And the image states that AI doesn't actually learn from art, but that it manipulates existing art beyond recognition to appear as though it's new, although it's not actually new. And that's the argument that AI is theft because AI can't visualize without reference art, but a human can.

[00:15:32] However, as I said earlier, there are other articles that state that AI generators are actually learning the rules of the art and they are creating something new, following these rules based on the new prompts. So it's a tricky area. Just wanted to get you up to speed with the sensitivities around AI art before we dive into how incredible of a tool it is. I just wanted to make sure that we start from a place of true understanding of where this art is coming from, how it's made, and that we're thinking about that before we're just diving in and using it.

[00:16:11] So now I wanna get into how AI art can benefit toy companies or toy designers in general in their design development process. Because the reality is this, AI art generation makes things easier. Companies are always trying to find the easier, cheaper, and faster way to get work done. They're always trying to increase profits and output, and it is possible that AI art can provide a solution to that. Now, in the toy design development process, ideation is a huge important step.

[00:16:47] And I've gotta say here, if you want an episode that is a complete breakdown of the toy design development process, and if you want a checklist on toy product development and design, head over to thetoycoach.com/63. I did a podcast episode on the five steps of product development, and there's a checklist you can download, so thetoycoach.com/63.

[00:17:12] Now let's go back to it. Now, it is possible that AI art can help speed up the toy design development process. Ideation is a huge important step with at least one to two months dedicated to research and ideation. Now imagine if AI art can be used to supplement that ideation time by generating additional concepts while cutting down on the ideation time needed itself. So I wanted to explore that and see if it was possible to do that. I ran a few toy design experiments inside of Midjourney and a couple of experiments inside of DALLE.

[00:17:52] So let's get into what happened. So with Midjourney, my very first experiment was this. I pretended like I was in the ideation phase of a new project and I pretended like I was given the job to design a new product, a sassy but cute seventies inspired baby doll. So normally my ideation process would be this.

[00:18:13] I would Google seventies dolls. Look at those styles, go out to toy stores, look at some dolls in the real world. Then I might venture outside of the industry and start looking at famous models from the seventies era. Take note of their hairstyles, facial features, and of course, clothing. And eventually I'd put that all together on a mood board and I would start sketching.

[00:18:34] Now, that process can take several hours, and if I have to travel to toy stores, it could take a couple of days to plan those trips, go out, see different things. But what if I hopped into Midjourney and simply typed in a prompt, maybe a prompt that says something like a sweet and sassy toy doll from the seventies?

[00:18:55] Well, I did that. I tried, and within seconds, I actually got four options of cute and diverse dolls fully rendered in kind of a 3D rendering. So they almost looked real, like you could pick them up on the shelf. To see my AI rendered dolls, head over to thetoycoach.com/158.

[00:19:13] Now depending on the rights that Midjourney gives me for this generated image, I could theoretically just choose one of these AI generated doll designs, quote unquote, and I could continue to illustrate a front, back and side view of that doll cutting my initial ideation process down from hours, potentially days to literally seconds.

[00:19:37] Crazy. So my findings from this first experiment are these: it is possible for us to use AI to ideate different things like doll concepts, and we can make those concepts inspired by something specific, like a specific decade, and even an attitude. Remember, I did sassy seventies. And then it's possible to use those rendered doll designs as part of your mood board before diving into a full sketch session and plan drawing process of your new doll design, which you'll develop with your factory.

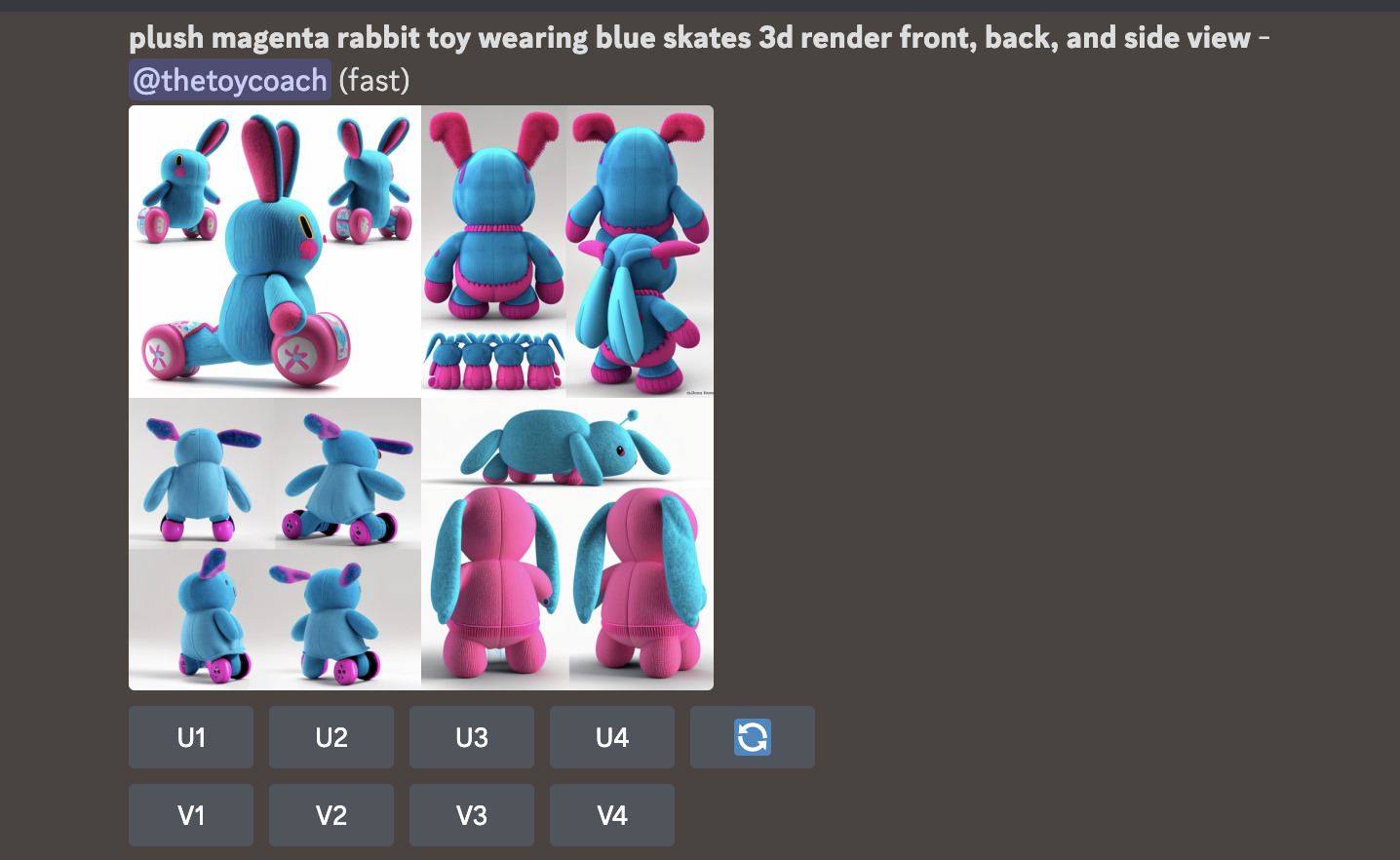

[00:20:11] Now, experiment number two. I wondered what would happen if I used Midjourney to finish even more of my design process. Could Midjourney help me render a front, back and side view of this doll that it generated? Well, sort of. So Midjourney has this ability where you can use any image that is posted online and you can prompt Midjourney to generate a new AI image based on a specific image of your choice. All you have to do is do the whole forward slash imagine thing and add the URL of the image, and then add some prompt information.

[00:20:54] So additional copy for that prompt. So I uploaded one of the dolls that Midjourney had generated, and then I asked Midjourney to give me a front, back and side view of that doll. Literally, the prompt was front, back and side view of that doll, and that doll I was hoping Midjourney would know was the URL that I put right in front of that prompt.
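The two kinds of Midjourney prompt described so far, text only and image link plus text, can be sketched as a small Python helper. This only formats the text you would type into Discord yourself; it does not talk to Midjourney. The function name `build_imagine_command` and the example URL are hypothetical placeholders, not anything Midjourney provides.

```python
from urllib.parse import urlparse


def build_imagine_command(prompt_text: str, image_url: str = "") -> str:
    """Hypothetical helper: format the text to type into Discord."""
    parts = []
    if image_url:
        parsed = urlparse(image_url)
        # The reference image has to be a web link, not a local file path.
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            raise ValueError(f"not a web URL: {image_url!r}")
        parts.append(image_url)  # the image link goes in front of the prompt text
    parts.append(prompt_text.strip())
    return "/imagine prompt: " + " ".join(parts)


# Text-only prompt, like the seventies doll experiment.
print(build_imagine_command("a sweet and sassy toy doll from the seventies"))

# Image link followed by prompt text, like the front, back and side view experiment.
print(build_imagine_command(
    "front, back and side view of that doll",
    image_url="https://example.com/my-doll.png",  # placeholder URL, an assumption
))
```

Keeping the image link and the wording separate makes it easy to rerun the same reference image with different instructions, which is handy when the first results drift away from the original doll.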

[00:21:17] So the results were actually impressive. I got a front and side view of four different dolls, but they all looked pretty different from my original doll. They were actually little white girls with bouncy curls instead of the doll that I chose, which was this little black girl with an afro. But the style, the seventies style, the color way of the photo was still very similar.

[00:21:41] Even the clothing style was kind of similar. So the full body view that Midjourney generated could work on the initial doll head that I'd gotten from that first generation. So it could work. Again, to see all of my AI rendered dolls go to thetoycoach.com/158. Findings from this experiment number two.

[00:22:02] When I was in school for toy design, my classmates and I would often ask each other to like pose for photos so we could use those photos for reference images as we drew our toy concept boards and sometimes our plan drawings. Now plan drawings, if you don't know, are front, back and side views of a product design that are really useful when you are going to production with your toy.

[00:22:27] So you can either send your plan drawing directly to the factory and then the factory will make a 3D model of your toy. Or if you can do 3D modeling or you're working as a part of a team with a 3D modeler on it, your plan drawing would inform what the 3D modeling artist should create. So your plan drawing has measurements and should be completely accurate to the size of the product and the placement of buttons, and even the design of the clothing, all that. That's what your plan drawing is for.

[00:22:55] So with Midjourney now, it seems that it's possible to use AI to generate your plan drawings. To generate a full body front, a side, and even a back view of a doll that could be used as your reference when creating your toy concept board or even creating your plan drawing. Now, if you aren't sure what a toy concept board or a plan drawing is, I really want you to head over to toycreatorsacademy.com because this is my one of a kind toy program that will teach you that and so much more about toy business so you can develop and launch your best toy or game ideas yet.

[00:23:32] So if you like that little mini lesson I just gave, then you're going to love Toy Creators Academy. And again, that's toycreatorsacademy.com. Alright, let's keep it moving. Let's go on to the third Midjourney experiment that I ran. So this experiment was inspired by my own toy designs because after running a few prompts like the drag queen Barbie, the seventies inspired doll, and a roller skating bunny rabbit that we don't really need to talk about, cause that looked kind of weird.

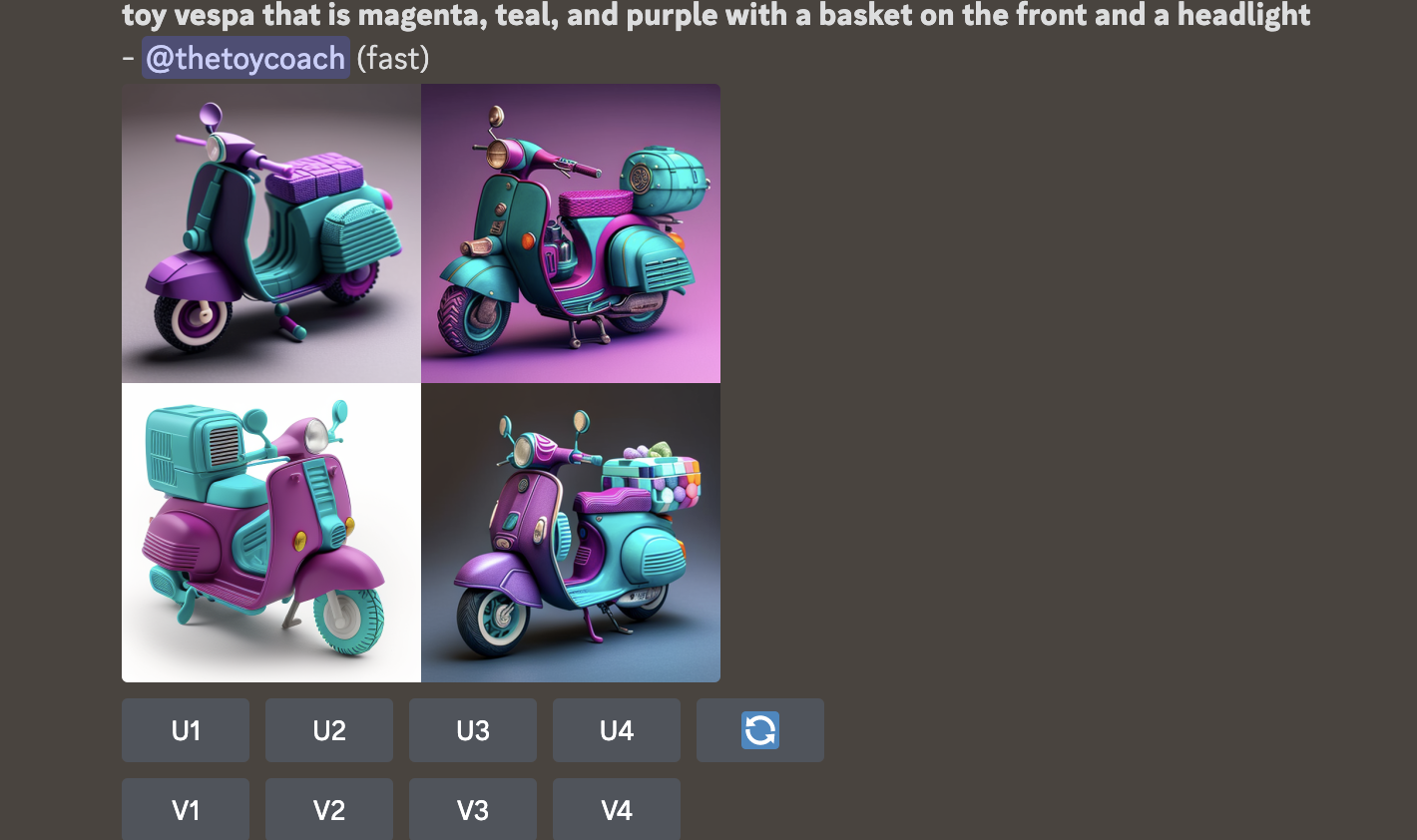

[00:24:01] I started to wonder, will toy companies still need designers in 10 years or will they just need copywriters to generate prompts or designers for manufacturing to turn these images into actual manufacturable products? Right? So to test that scary, horrifying theory, I decided to try and generate a toy Vespa, and that is a product that I designed for Toys R Us for their Journey Girls doll line.

[00:24:32] So all I did was put in this prompt, quote, toy Vespa that is magenta, teal and purple with a basket on the front and a headlight. That is it. And the AI generation of this toy concept was gorgeous and actually quite close to the one that I had rendered for Journey Girls. It clearly didn't take into account things like costing, because there was extensive detailing, multiple textures on the seat of the Vespa, on the wheels of the Vespa, on the handles, color placement that you just sometimes can't get with toys. Because when you are developing a toy for manufacturing, certain pieces are in the mold together and the factory will often tell you like, okay, we can make these pieces all the same color, and these pieces all the same color.

[00:25:22] And that's the cheapest option. And if you wanna do something different, you can, but it's gonna cost you more money. Well, these AI generated images just didn't care about any of that. They were using every color everywhere, whatever they wanted. So color placement, depending on how your parts are built in the mold. Not considered. However, these designs were really fresh, edgy, and inspiring, and dare I say, I actually liked some of these generations better than my original design.

[00:25:47] Granted, I was limited by manufacturing ability and cost. And this render is certainly not, it's just a picture, not a full product. But there is a level of detail exploration that I think could have added to my original design without affecting the cost too much, like subtle ridges over the wheel of the bike, really subtle details embossed into the handles. Things that when you are pressed for time as a designer, you don't often have time to dive into or to push the factory to get correctly.

[00:26:21] So that was really interesting. So findings from experiment number three. How can this AI generation of a toy actually be used in the toy industry? I had some ideas, so imagine this. Imagine you're on the sales team, you're sitting in a meeting with a toy buyer.

[00:26:38] You have a great relationship with this buyer, but this season, for some reason, they are not feeling any of the designs or product samples you walked in the door with. You even showed them this new camper van for your ever expanding doll line, a doll line that they love. You were sure they were gonna love this van, but the buyer leans over and tells you, you know what?

[00:26:59] I would really like to see a Vespa for this line. I would love to see a magenta, teal and purple Vespa. Those colors are hot. And with our new eco focused clientele, Vespas are growing in popularity. I think I'd like to see that in your line. Then imagine you, a salesperson, logging into Midjourney, typing in a prompt, generating a quick image, and saying, what about something like this?

[00:27:24] The buyer's eyes light up. They say, yes, I like that. Can you make that? If you can, I'd be in for about 20,000 units. So then imagine that design goes over to your design and product development team. You attach the image. You say, we need something inspired by this, you give them a retail price point and you tell them you need a prototype in three months.

[00:27:47] Could that happen? I have no idea. But I think it could be a really incredible and powerful way to save a meeting when things aren't going well, to generate concepts without wasting too many months in development, and to get verification of an idea from a buyer before you've wasted your design team's time and your company's resources on developing a prototype.

[00:28:16] I can't tell you how often we would develop products for sales meetings and they just wouldn't be right. Had we had a chance to get a more finished version of the product in front of the buyer earlier, we could have had a chance to get better feedback and offer exactly what they were looking for sooner. So I do think there is opportunity for Midjourney to be a part of the corporate toy development process. Now I don't know exactly what that will look like for legal reasons.

[00:28:46] Who owns this artwork? What can we really do with it? But we will talk about that a little bit later in this episode. For now, let's go on to Midjourney experiment number four. So after that experiment, I was really excited but also kind of scared of this whole AI thing. So as a designer myself, I thought, wow, you know, this computer designed a better Vespa than I did.

[00:29:08] Maybe it wasn't a better toy, but it did a pretty cool rendering of what could potentially become a toy. So I started to think how could a designer just use this AI to improve their own work? So what I think you could do is upload an original sketch to Midjourney and tell it to make your idea more steampunk or to make your idea have dalmatian spots.

[00:29:31] And I did this. And the output was really just a random amalgamation of steampunk elements like helmets and goggles, and also a recolor of the product to orange and blue. Now, what's really cool about this is for the toy industry, we like to think about how we can expand successful products. So re-theming is often an exercise you'll see in a product that did really well. One year, they'll re-theme it the following year. Now the results from this prompt definitely weren't ready to show to a client or to a buyer, but it can be used as a source of inspiration.

[00:30:09] There were some details that could definitely be used as a source of inspiration for illustrating a steampunk version of a Vespa for a following season. Now, before I ever discovered Midjourney, I'd been spending most of my time toying with DALLE. I'd attempted to render a few plush toys and a doll and dollhouse.

[00:30:30] And what I've come to discover is that DALLE seems to be better at rendering scenes than it is at rendering products or toys. At least that's what I've found for now with the prompts that I've given it. So I ran three experiments with DALLE.

[00:30:44] And as we go through these experiments, you'll see these are leaning much more scenic based. And because of that, they might be more useful for someone developing a book or a scene of some sort. So let's go to DALLE Experiment number one. This one starts with a story.

[00:31:01] So back when I studied Toy Design at FIT I created a children's book. It was about 32 pages, and I wrote the entire story, sketched it, and digitally rendered every single page for my senior project. Super colorful, highly detailed, a lot of Illustrator, a little bit of Photoshop. And the biggest problem that I had was that I wasn't the fastest illustrator.

[00:31:25] It took me a long time to get like proportions and angles right. So often I would draw out a page and realize that I should have changed the viewpoint for a more powerful image. But since I was slow to illustrate, I never really had time to fix it, and I had to keep it moving. So when I discovered DALLE was better with scenes,

[00:31:43] I started thinking about that storybook because I always wished that I could have chosen different angles. So I started to think about ways that DALLE could be used to render pages of a children's book. So my first experiment was this prompt quote, A black girl with red Afro hair looking up at a tall pile of plush toys with big eyes in a gold room, realistic.

[00:32:07] And the results were really exciting. I had about three great images, which you can find at thetoycoach.com/158, that could be used to inspire a layout for a children's book. Now these images are definitely not final art. The eyes are wonky, things are unfinished. They should not be used as is,

[00:32:29] but they could be used as incredible reference images for an artist who is going to be illustrating a children's book. I think if I had this when I was creating my storybook, I could have generated different pages of my book, looked at how they look and feel together, chosen the best angle or composition, and then proceeded to fully draw out and design out that page.

[00:32:53] It could have helped me create my book faster by cutting down ideation time, and maybe helped me create a higher quality book. I remember in that book I had some pages where the views were amazing and I was super proud of the angles I chose, and there were other pages that I thought just fell kind of flat. So a tool like this would've been a welcome assistant. To take a look at the images I generated, head over to thetoycoach.com/158.

[00:33:21] DALLE, experiment number two. With that thought I had to try one more thing. I wanted to try to generate a view of one of my storybook pages. Yeah, the storybook that I designed years ago. So there is this one page in my storybook, the very first page where the two main characters are rushing toward a store trying to get in before it's closed. Now, I wasn't a pro at using DALLE at the time, so I probably didn't use the best prompt.

[00:33:52] But the prompt I entered was this: "two young black sisters running down a street toward a shop in a small town road with two cars parked outside." And the resulting images honestly couldn't hold a candle to the first page of my storybook, but maybe I'm biased. It is my art. Now, to see the two images and compare the storybook image that I was trying to replicate from my original storybook

[00:34:17] and what the AI generator outputted, just check out thetoycoach.com/158. Now I even tried to clarify the prompt to get an image that looked more like the one that I drew. So then I tried this: "dramatic angle, two young black sisters running down a street toward a shop on a small town road with two cars parked outside, digital art."

[00:34:41] But still, some of the images that DALLE generated honestly looked post-apocalyptic. And looking at what I came up with for my storybook, I don't think that, even having used these images as a reference, it would've helped me get to where I got with my original storybook art. In fact, I think that had I had these images to reference, I might have been influenced to go in a moodier direction with my storybook illustration.

[00:35:09] Whereas what I came up with was very, like, colorful and light, the images generated by DALLE just felt very sparse and kind of moody. So, experiment number two findings: we have got to be careful to not let AI limit our own creativity. Sometimes AI's output can be a good source of inspiration, but I think it's important, more now than ever, that you have a clear vision for the art or the product that you intend to produce before you use AI to help support that process. Without that clear direction, you could find yourself being led to design or develop a product whose overall vibe and style is led by AI more than your own creativity and vision.

[00:35:57] It would be smarter of us to instead use AI to generate inspiration, to fuel our creativity instead of allowing it to lead our creativity. I think that's gonna be a really, really important differentiator as AI progresses in our world. Now my final DALLE experiment number three. Once I realized DALLE's strength was in scenes and not in individual product generation, I wanted to try one more thing to see if it could generate a toy.

[00:36:29] So I asked my best friend to give me an idea for a toy product, and I planned to describe her toy within a scene to DALLE to get it to properly generate it. So my friend came up with a glow-in-the-dark lightning bug plush. So I reformatted that into a prompt that said, "photo of a little girl sleeping in a dark room with a plush toy firefly that is lit up," and it worked.

[00:36:56] The resulting photo would be perfect inspiration for what we call a call out on a package. Now, a call out on a toy package is an image that shows your toy in use by your ideal target market, or your ITM. Again, if you wanna learn more about all that stuff, head over to toycreatorsacademy.com.

[00:37:16] I would love to teach you more inside of my program, Toy Creators Academy. And the design of the toy that DALLE outputted actually wasn't too bad either. It was a pretty simple yellow firefly with a long body and a small head and a transparent wing, but it did have some funky lights. It almost looked like it had polka dot spots all over its body that were the lights that lit up.

[00:37:40] To check out that image again, head over to thetoycoach.com/158. So my final finding from my last DALLE experiment is this: toy designers no longer need to ask their friends or family to stand in and pose for reference photos.

[00:38:20] We've got AI for that. Now, DALLE seems to be great for getting poses of kids, or even adults, holding toy products. Those photos can be translated into images for product presentation decks, used as references to illustrate call outs on product concept boards, or even used as reference photos during a product photo shoot for your toy. You can say, I wanna set up an image or a scene that looks something like this. So they're really a way to cut down the planning and ideating portion for scenes in which you wanna showcase your product.

[00:38:55] So the last thing we've gotta talk about today is the legal stuff. While I've been going through all the cool things you can do with Midjourney and DALLE and Lensa, you might be wondering, is it safe to use these tools? Is it safe to upload your concept sketches to AI tools to get them to reference them? Who actually owns the input and the output art? Could it pose legal troubles for the companies that use these platforms? And can I actually create a product based on something that was generated by AI? What is the gray area for that?

[00:39:29] Well, I am not a lawyer, so this is not legal advice. So I would recommend, before you do anything with AI on your toy product, that you do your homework. Now, the use of AI artwork in the development of toy design is definitely going to come from the top of the company that you work for. The company would need to have its own account. So the company's account would be the one generating these images, so that everything can be monitored and the terms can be legally reviewed, and there would have to be clear company rules on how those images are approved to be used in the toy development process.

[00:40:09] Let's talk about the legalities and the privacy of just Midjourney. It is great artwork that comes out very quickly and is pretty affordable. But the problem here is we don't have privacy in the beta version of Midjourney, which gives you 25 prompts to test out the program. Every prompt and resulting image is available for the public to see, and can further be used to develop the AI of Midjourney itself. So here's where things get really interesting. If you read Midjourney's Terms and Conditions, it gives a Creative Commons non-commercial license to free users. So you can use the free images that are generated and share them as long as you're not selling them. So the question becomes, if you're a creator generating a free AI image, do you risk getting yourself in trouble

[00:40:59] even just using those AI images as reference? What if the final toy that you produce looks too close to the rendering? And then aside from that, what toy inventor, entrepreneur, or corporate toy designer would want the world to see their Midjourney prompts and AI generations? Because those sources of inspiration are essentially proprietary. And we don't want our toy designs out there before the product is complete. Right?

[00:41:26] So yes, it's clear the uncertainty of copyright and ownership is a major drawback to any AI art generator, not just Midjourney. But Midjourney does have some options that may offer an answer or a solution to that problem. So you can become a paid subscriber of Midjourney, and being a paid subscriber offers you the option to use the AI generation bot in your direct messages in Discord instead of a public channel with other people.

[00:41:58] And then the images that you make within your direct messages are still subject to the content and moderation rules that all images made through Midjourney are. And I've read that they are still visible on your Midjourney website gallery. If you don't want your images to be on any website gallery online, Midjourney has a paid option for that, where you can have something called Stealth Image Generation Mode, which is included in their top tier commercial plan.

[00:42:30] The commercial plans for Midjourney are actually quite affordable. They start at just $10 a month, and the top plan is $60 a month. So if you wanna check out Midjourney's full terms and conditions, and I suggest you do, go to midjourney.com, scroll down, and look for their terms of service. I'm gonna throw the link in the webpage for this episode.

[00:42:54] Now, DALLE's terms are much more vague. Their terms define your input into their program and their output both as what they call, quote unquote, content, and they provide you the right, title, and interest to that content. But what that really means is kind of up for debate. They also state that you're responsible for making sure that your content doesn't violate any law, which is interesting, considering that their service is actually what generates that content. The use of images output by DALLE is very vague, which is probably why this one is also such a contention point today for artists.

[00:43:34] Now, if you have a copyright claim, there is a place where you can reach out to the creators of DALLE at least. All you have to do is go to openai.com/terms, and if you scroll down, you'll find the email and a physical mailbox location where you can send a complaint if you feel like your copyright has been infringed upon.

[00:43:55] By this point you might be wondering, Azhelle, are people really using AI in their design development process? Is this just an idea you have or is this something that is actually happening? Well, it is both. It's an idea I had, but it's also something that is happening.

[00:44:11] I have a few examples for you of how AI art is currently being used in the design development process of, to my knowledge, puzzles and games. A colleague of mine who works with a puzzle designer shared that the designer uses AI to generate scenes, perspectives, and different compositions as part of her ideation research. And that process using AI has been cutting down her concept development time, and thus cutting down her production time of new puzzle artwork from a month to a week.

[00:44:48] The second use case of AI that I've seen is among independent board game designers in a board game design group that I'm a part of. Designers are using the app to generate packaging artwork concepts for their board games. The example I saw was a digital art rendering of a person standing in a forest, and the designer applied that digital art rendering to the front of a box, and then asked people in the group for feedback to see what they thought of the box design, only later revealing that that box design was actually created by AI.

[00:45:23] The third use case of AI art to improve the development process of a game that I've found is by the co-creator of the game Hissy Fit. His name is Chris Stone, and in a group I'm a part of, he shared that he's working with an artist whose process involves drawing from photos. They draw from reference photos, but because the copyright status of illustrations that are created from copyrighted photos is complicated, they've only been using original images of cats that they take themselves, so that they can use them in their game, Hissy Fit, or photos that they can find in the public domain.

[00:46:02] Now, when they can't find exactly the right image, AI has allowed them to create public domain imagery from which their artist can produce content for their game in their original art style. Now, if you wanna learn more about the Hissy Fit game, I would really love to ask you to support Chris's Kickstarter over at hissyfitgame.com.

[00:46:26] Before I jump into my summary of today's episode, I'd like to take a quick break and give a shout out and thanks to Chris Stone, co-creator of the Hissy Fit game. Thanks for chatting with me online about AI. I hope your Kickstarter will be a huge success. Anyone listening to this episode, please visit hissyfitgame.com to support.

[00:46:48] Let's dive into our conclusion. Now, AI is a tool that has been gaining in popularity faster than copyright laws can keep up with it. In my opinion, it's important that designers and artists understand how it works and consider the possibility of using it to speed up design processes. However, in doing that, we have to be aware of the legalities of using AI art and make sure that it is just inspiration and not replacing the design process entirely. And at least for now, it doesn't seem to be able to.

[00:47:23] When I think about how AI will change the way that creative fields function, I can't help but come to the realization that there is a new king in this world. You know how we always used to say content is king? I think it's safe to say that data is the new king, because without data, these AI generators wouldn't be able to do what they're doing.

[00:47:48] Sites like Google, LinkedIn, Instagram, Facebook have been collecting data on us for decades. And typically their data is looking at what we're currently interested in, what we've done in the past, where we've gone and they're using all that data to gather and infer what they think we will want so that they can create products that we will purchase or will need and market them to us.

[00:48:14] Right. Well, I'm coming to this conclusion that AI apps like Midjourney and DALLE are gathering data of what we think. Right? So instead of just gathering data of what we're engaging with, these apps are gathering data of what we think, what we're imagining, what we want to see but don't see, what we visually like, and what we wanna see more of.

[00:48:38] How powerful could data like that be when combined with existing data that companies already have on us and what we currently engage with? As we look ahead, we can see that a digital era of visual excess is probably on the horizon. Meanwhile, in our physical world, we are experiencing inflation. We are on the precipice of maybe a recession, and that might lead to simplification in our homes and the types of products that we buy. But while all that's happening, the cost of high quality art creation seems to have gone down.

[00:49:22] So paintings hanging in homes, art in books, art in video games, all that can get more complex, because while we're saving time in ideation, we can use that time in execution. Okay. Enough thinking about what is possibly to come, let's get into your action item to do for next week.

[00:49:43] So I want you to send me a message on Instagram and tell me if you use AI art in your creative process. You can find me at The Toy Coach. If you do, share how you use it. If you love this podcast and you haven't already left a review, what are you waiting for? Your reviews mean the world to me.

[00:50:01] I get a notification on my phone each time a new one comes in, and they keep me motivated to come back week after week. Wherever you're listening to this podcast, I would appreciate it so much if you went into your podcast app, looked for where you can write a review, and wrote a positive review for the show.

[00:50:21] As always, thank you so much for spending this time with me today. I know your time is valuable and that there are a ton of podcasts out there, so it means the world to me that you tune into this one. Until next week, I'll see you later toy people.

-

🎓Learn more about how you can develop and pitch your toy idea with Toy Creators Academy® by clicking here to visit toycreatorsacademy.com and join the waitlist.

Not ready for the Toy Creators Academy online course? Start by connecting with fellow toy creators inside our online community. Click here to join.